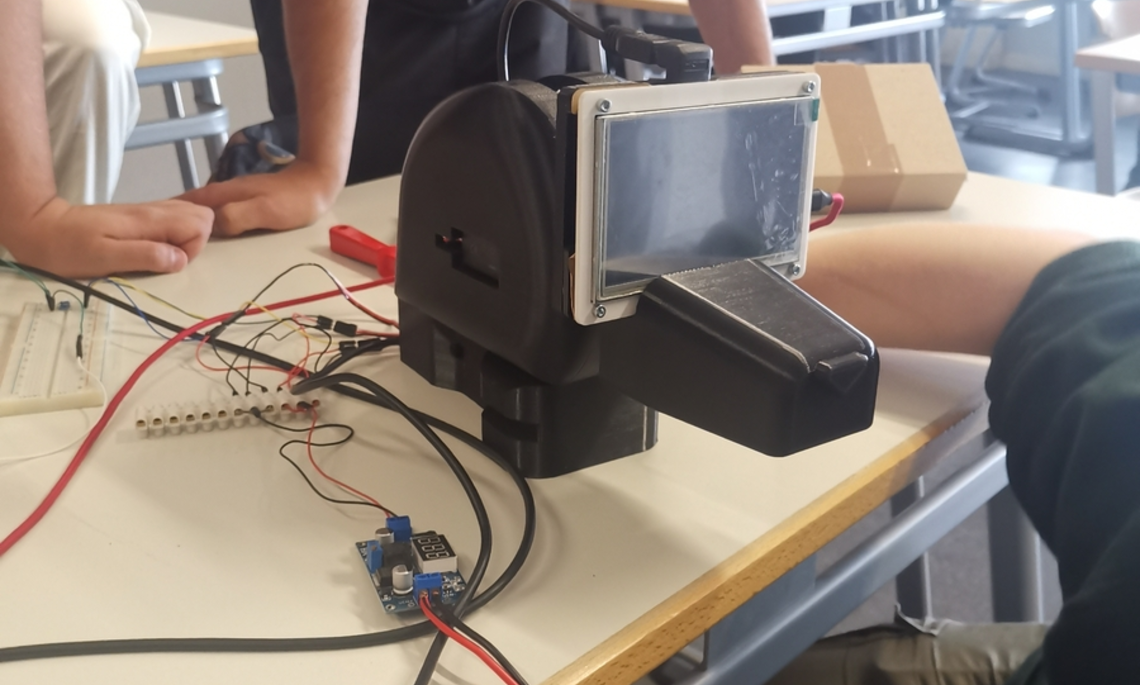

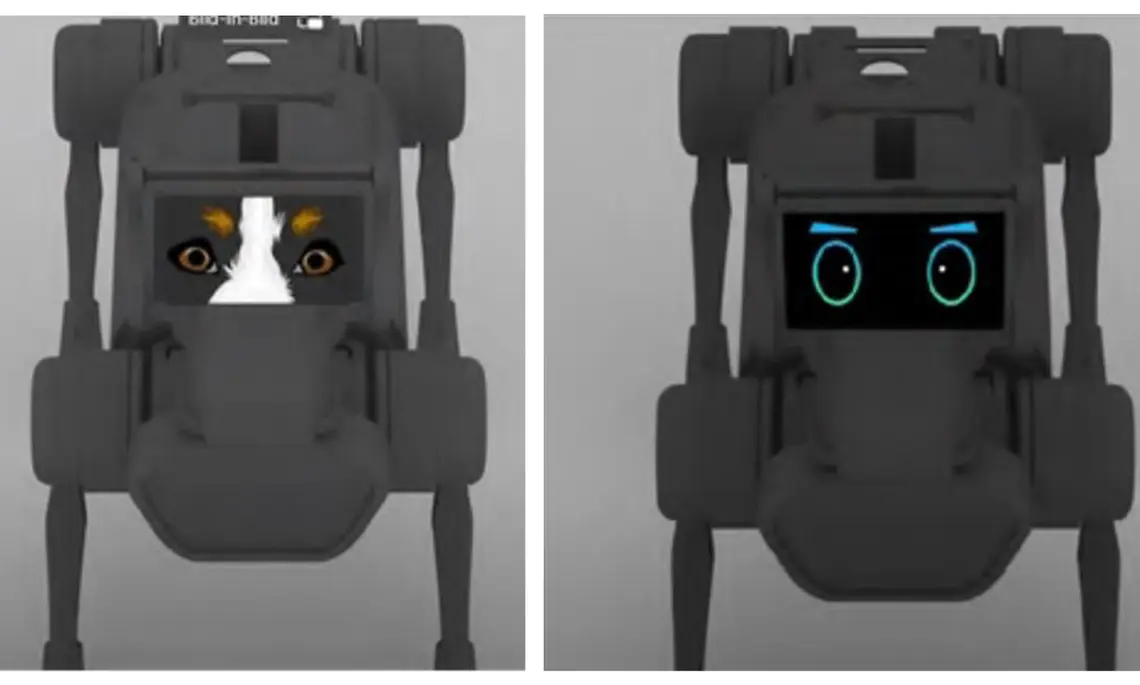

The interdisciplinary project "Empathy with aN AutonoMOUs Robot" (ENAMOUR), which ran in the summer semester of 2022, focused on developing an empathic robot. Christian Anghelide from AININ gGmbH put it aptly at the final presentation: "We (AININ) brought a robot and got a (robot) dog back."

A total of 35 students from four degree programs (Artificial Intelligence, UX Design, Computer Science and Mechanical Engineering) worked on the further development of the robot dog. Prof. Dr. Gerhard Elsbacher, Prof. Dr. Stefan Kugele, Prof. Dr. Munir Georges and Mariano Frohnmaier supervised the four study groups.

Goal

The robot dog "Spike" should be able to correctly recognize the emotions in its owner's voice commands and respond emotionally, e.g. through appropriate body language, facial expressions and sounds.

![Student group, supervisors and robot dog](/fileadmin/_processed_/a/csm_roboterhund_spike_donaukurier_234e3215e9.webp)
